Penumbra is a fully private proof-of-stake network and decentralized exchange for the Cosmos ecosystem.

Penumbra integrates privacy with proof-of-stake through a novel private delegation mechanism that provides staking derivatives, tax-efficient staking, and on-chain governance with private voting. Penumbra connects to the Cosmos ecosystem via IBC, acting as an ecosystem-wide shielded pool and allowing private transactions in any IBC-compatible asset. Users can also swap these assets using ZSwap, a private decentralized exchange supporting sealed-bid batch auctions and Uniswap-v3-style concentrated liquidity. Sealed-bid batch auctions prevent frontrunning, provide better execution, and reveal only the net flow across a pair of assets in each block, and liquidity positions are created anonymously, allowing traders to approximate their desired trading function without revealing their individual beliefs about prices.

This website renders the work-in-progress protocol specification for Penumbra.

Press s or use the magnifying glass icon for full-text search.

If you’re interested in technical discussion about the project, why not

- join the discord,

- check out the repo and issue tracker,

- view the roadmap goals,

- or follow the project on Twitter for updates.

Private Transactions

Penumbra records all value in a single multi-asset shielded pool based on the Zcash Sapling design, but allows private transactions in any kind of IBC asset. Inbound IBC transfers shield value as it moves into the zone, while outbound IBC transfers unshield value.

Unlike Zcash, Penumbra has no notion of transparent transactions or a

transparent value pool; instead, inbound IBC transfers are analogous to t2z

Zcash transactions, outbound IBC transfers are analogous to z2t Zcash

transactions, and the entire Cosmos ecosystem functions analogously to

Zcash’s transparent pool.

Unlike the Cosmos Hub or many other chains built on the Cosmos SDK, Penumbra has no notion of accounts. Only validators have any kind of long-term identity, and this identity is only used (in the context of transactions) for spending the validator’s commission.

Private Staking

In a proof-of-stake system like the Cosmos Hub, stakeholders delegate staking tokens by bonding them to validators. Validators participate in Tendermint consensus with voting power determined by their delegation size, and delegators receive staking rewards in exchange for taking on the risk of being penalized for validator misbehavior (slashing).

Integrating privacy and proof of stake poses significant challenges. If delegations are public, holders of the staking token must choose between privacy on the one hand and staking rewards and participation in consensus on the other hand. Because the majority of the stake will be bonded to validators, privacy becomes an uncommon, opt-in case. But if delegations are private, issuing staking rewards becomes very difficult, because the chain no longer knows the amount and duration of each address’ delegations.

Penumbra sidesteps this problem using a new mechanism that eliminates staking rewards entirely, treating unbonded and bonded stake as separate assets, with an epoch-varying exchange rate that prices in what would be a staking reward in other systems. This mechanism ensures that all delegations to a particular validator are fungible, and can be represented by a single delegation token representing a share of that validator’s delegation pool, in effect a first-class staking derivative. Although delegation fungibility is key to enabling privacy, as a side effect, delegators do not realize any income while their stake is bonded, only a capital gain (or loss) on unbonding.

The total amount of stake bonded to each validator is part of the public chain state and determines consensus weight, but the delegation tokens themselves are each just another token to be recorded in a multi-asset shielded pool. This provides accountability for validators and privacy and flexibility for delegators, who can trade and transact with their delegation tokens just like they can with any other token.

It also provides an alternate perspective on the debate between fixed-supply and inflation-based rewards. Choosing the unbonded token as the numéraire, delegators are rewarded by inflation for taking on the risk of validator misbehavior, and the token supply grows over time. Choosing (a basket of) delegation tokens as the numéraire, non-delegators are punished by depreciation for not taking on any risk of misbehavior, and the token supply is fixed.

Private Governance

Like the Cosmos Hub, Penumbra supports on-chain governance with delegated voting. Unlike the Cosmos Hub, Penumbra’s governance mechanism supports secret ballots. Penumbra users can anonymously propose votes by escrowing a deposit of bonded stake. Stakeholders vote by proving ownership of their bonded stake prior to the beginning of the voting period and encrypting their votes to a threshold key controlled by the validator set. Validators sum encrypted votes and decrypt only the per-epoch totals.

Private DEX

Penumbra provides private batch swaps using ZSwap. ZSwap allows users to privately swap between any pair of assets. Individual swaps publicly burn their input notes and privately mint their output notes. All swaps in each block are executed in a single batch. Users can also provide liquidity by anonymously creating concentrated liquidity positions. These positions reveal the amount of liquidity and the bounds in which it is concentrated, but are not otherwise linked to any identity, so that (with some care) users can privately approximate arbitrary trading functions without revealing their specific views about prices.

Concepts and Mechanisms

This section provides an overview of the concepts involved in Penumbra’s mechanism design.

Validators

Validators in Penumbra undergo various transitions depending on chain activity.

┌────────────────────────────────────────────────────────────────────────────┐

│ │

│ ┌ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ │

│ Genesis Validator │ │

│ │ ┏━━━━━━━━━━━━━━━━━━━━━━━┓ │

│ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ┘ ┃ Tombstoned ┃ │

│ │ ┌──────▶┃ (Misbehavior) ┃ │

│ │ │ ┗━━━━━━━━━━━━━━━━━━━━━━━┛ │

│ │ │ │

┌ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ │ ▼ │ ▼

Validator │ ┏━━━━━━━━━┓ ╔══════╗ │ ┏━━━━━━━━━━━┓

│ Definition ──────▶┃Inactive ┃─────────────▶║Active║───────┼────────────────────────────────┬─▶┃ Disabled ┃

(in transaction) │ ┗━━━━━━━━━┛ ╚══════╝ │ │ ┗━━━━━━━━━━━┛

└ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ▲ │ ┏━━━━━━━━━━━━━━━━━━━━━┓ │ │

│ └──────▶┃ Jailed (Inactivity) ┃──┘ │

│ ┗━━━━━━━━━━━━━━━━━━━━━┛ │

│ │ │

└─────────────────────────────────────────────────────┴──────────────────────┘

Single lines represent unbonded stake, and double lines represent bonded stake.

Validators become known to the chain either at genesis, or by means of a transaction with a ValidatorDefinition action in them. Validators transition through five states:

- Inactive: a validator whose delegation pool is too small to participate in consensus set

- Active: a validator whose delegation pool is large enough to participate in consensus and must meet uptime requirements

- Jailed: a validator that has been slashed and removed from consensus for downtime, that may return later

- Tombstoned: a validator that has been permanently slashed and removed from consensus for byzantine misbehavior and may not return

- Disabled: a validator that has been manually disabled by the operator, that may return to

Inactivelater

Validators specified in the genesis config begin in the active state, with whatever stake was allocated to their delegation pool at genesis. Otherwise, new validators begin in the inactive state, with no stake in their delegation pool. At this point, the validator is known to the chain, and stake can be contributed to its delegation pool. Stake contributed to an inactive validator’s delegation pool does not earn rewards (the validator’s rates are held constant), but it is also not bonded, so undelegations are effective immediately, with no unbonding period and no output quarantine.

The chain chooses a validator limit N as a consensus parameter. When a validator’s delegation pool (a) has a nonzero balance and (b) its (voting-power-adjusted) size is in the top N validators, it moves into the active state during the next epoch transition. Active validators participate in consensus, and are communicated to Tendermint. Stake contributed to an active validator’s delegation pool earns rewards (the validator’s rates are updated at each epoch to track the rewards accruing to the pool). That stake is bonded, so undelegations have an unbonding period and an output quarantine. An active validator can exit the consensus set in four ways.

First, the validator could be jailed and slashed for inactivity. This can happen in any block, triggering an unscheduled epoch transition. Jailed validators are immediately removed from the consensus set. The validator’s rates are updated to price in the slashing penalty, and are then held constant. Validators jailed for inactivity are not permanently prohibited from participation in consensus, and their operators can re-activate them by re-uploading the validator definition. Stake cannot be delegated to a slashed validator. Stake already contributed to a slashed validator’s delegation pool will enter an unbonding period to hold the validator accountable for any byzantine behavior during the unbonding period. Re-delegations may occur after the validator enters the “Inactive” state.

Second, the validator could be tombstoned and slashed for byzantine misbehavior. This can happen in any block, triggering an unscheduled epoch transition. Tombstoned validators are immediately removed from the consensus set. Any pending undelegations from a slashed validator are cancelled: the quarantined output notes are deleted, and the quarantined nullifiers are removed from the nullifier set. The validator’s rates are updated to price in the slashing penalty, and are then held constant. Tombstoned validators are permanently prohibited from participation in consensus (though their operators can create new identity keys, if they’d like to). Stake cannot be delegated to a tombstoned validator. Stake already contributed to a tombstoned validator’s delegation pool is not bonded (the validator has already been slashed and tombstoned), so undelegations are effective immediately, with no unbonding period and no quarantine.

Third, the validator could be manually disabled by the operator. The validator is then in the disabled state. It does not participate in consensus, and the stake in its delegation pool does not earn rewards (the validator’s rates are held constant). The stake in its delegation pool will enter an unbonding period at the time the validator becomes disabled. The only valid state a disabled validator may enter into is “inactive”, if the operator re-activates it by updating the validator definition.

Fourth, the validator could be displaced from the validator set by another validator with more stake in its delegation pool. The validator is then in the inactive state. It does not participate in consensus, and the stake in its delegation pool does not earn rewards (the validator’s rates are held constant). The stake in its delegation pool will enter an unbonding period at the time the validator becomes inactive. Inactive validators have three possible state transitions:

- they can become active again, if new delegations boost its weight back into the top N;

- they can be tombstoned, if evidence of misbehavior arises during the unbonding period;

- they can be disabled, if the operator chooses.

If (2) occurs, the same state transitions as in regular tombstoning occur: all pending undelegations are cancelled, etc. If (3) occurs, the unbonding period continues and the validator enters the disabled state. If (1) occurs, the validator stops unbonding, and all delegations become bonded again.

Batching Flows

Penumbra’s ledger records value as it moves between different economic roles – for instance, movement between unbonded stake and delegation tokens, movement between different assets as they are traded, etc. This creates a tension between the need to reveal the total amount of value in each role as part of the public chain state, and the desire to shield value amounts in individual transactions.

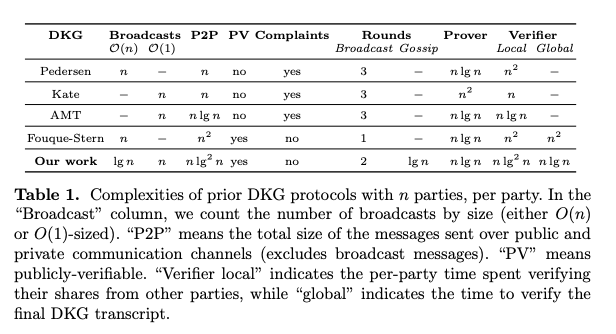

To address this tension, Penumbra provides a mechanism to aggregate value flows across a batch of transactions, revealing only the total amount and not the value contributed by each individual transaction. This mechanism is built using an integer-valued homomorphic encryption scheme that supports threshold decryption, so that the network’s validators can jointly control a decryption key.

Transactions that contribute value to a batch contain an encryption of the amount. To flush the batch, the validators sum the ciphertexts from all relevant transactions to compute an encryption of the batch total, then jointly decrypt it and commit it to the chain.

This mechanism doesn’t require any coordination between the users whose transactions are batched, but it does require that the validators create and publish a threshold decryption key. To allow batching across block boundaries, Penumbra organizes blocks into epochs, and applies changes to the validator set only at epoch boundaries. Decryption keys live for the duration of the epoch, allowing value flows to be batched over any time interval from 1 block up to the length of an epoch. We propose epoch boundaries on the order of 1-3 days.

At the beginning of each epoch, the validator set performs distributed key generation to produce a decryption key jointly controlled by the validators (on an approximately stake-weighted basis) and includes the encryption key in the first block of the epoch.

Because this key is only available after the first block of each epoch, some transactions cannot occur in the first block itself. Assuming a block interval similar to the Cosmos Hub, this implies an ~8-second processing delay once per day, a reasonable tradeoff against the complexity of phased setup procedures.

Addresses and Keys

Value transferred on Penumbra is sent to shielded payment addresses; these addresses are derived from spending keys through a sequence of intermediate keys that represent different levels of attenuated capability:

flowchart BT

subgraph Address

end;

subgraph DTK[Detection Key]

end;

subgraph IVK[Incoming Viewing Key]

end;

subgraph OVK[Outgoing Viewing Key]

end;

subgraph FVK[Full Viewing Key]

end;

subgraph SK[Spending Key]

end;

subgraph SeedPhrase[Seed Phrase]

end;

index(address index);

div{ };

SeedPhrase --> SK;

SK --> FVK;

FVK --> OVK;

FVK --> IVK;

index --> div;

IVK ----> div;

div --> Address & DTK;

DTK --> Address;

From bottom to top:

- the seed phrase is the root key material. Multiple wallets - each with separate spend authority - can be derived from this root seed phrase.

- the spending key is the capability representing spending authority for a given wallet;

- the full viewing key represents the capability to view all transactions related to the wallet;

- the outgoing viewing key represents the capability to view only outgoing transactions, and is used to recover information about previously sent transactions;

- the incoming viewing key represents the capability to view only incoming transactions, and is used to scan the block chain for incoming transactions.

Penumbra allows the same account to present multiple, publicly unlinkable addresses, keyed by an 16-byte address index. Each choice of address index gives a distinct shielded payment address. Because these addresses share a common incoming viewing key, the cost of scanning the blockchain does not increase with the number of addresses in use.

Finally, Penumbra also allows outsourcing probabilistic transaction detection to third parties using fuzzy message detection. Each address has a detection key; a third party can use this key to detect transactions that might be relevant to that key. Like a Bloom filter, this detection has false positives but no false negatives, so detection will find all relevant transactions, as well as some amount of unrelated cover traffic. Unlike incoming viewing keys, detection keys are not shared between diversified addresses, allowing fine-grained control of delegation.

This diagram shows only the user-visible parts of the key hierarchy. Internally, each of these keys has different components, described in detail in the Addresses and Keys chapter.

Privacy Implications

Users should be aware that giving out multiple detection keys to a detection entity can carry a subset of the privacy implications, described in Addresses and Detection Keys.

Assets and Amounts

Penumbra’s shielded pool can record arbitrary assets. To be precise, we define:

- an amount to be an untyped quantity of some asset;

- an asset type to be an ADR001-style denomination trace uniquely identifying a cross-chain asset and its provenance, such as:

denom(native chain A asset)transfer/channelToA/denom(chain B representation of chain A asset)transfer/channelToB/transfer/channelToA/denom(chain C representation of chain B representation of chain A asset)

- an asset ID to be a fixed-size hash of an asset type;

- a value to be a typed quantity, i.e., an amount and an asset id.

Penumbra deviates slightly from ADR001 in the definition of the asset ID. While ADR001 defines the IBC asset ID as the SHA-256 hash of the denomination trace, Penumbra hashes to a field element, so that asset IDs can be more easily used inside of a zk-SNARK circuit.

Notes, Nullifiers, and Trees

Transparent blockchains operate as follows: all participants maintain a copy of the (consensus-determined) application state. Transactions modify the application state directly, and participants check that the state changes are allowed by the application rules before coming to consensus on them.

On a shielded blockchain like Penumbra, however, the state is fragmented across all users of the application, as each user has a view only of their “local” portion of the application state recording their funds. Transactions update a user’s state fragments privately, and use a zero-knowledge proof to prove to all other participants that the update was allowed by the application rules.

Penumbra’s transaction model is derived from the Zcash shielded transaction design, with modifications to support multiple asset types, and several extensions to support additional functionality. The Zcash model is in turn derived from Bitcoin’s unspent transaction output (UTXO) model, in which value is recorded in transaction outputs that record the conditions under which they can be spent.

In Penumbra, value is recorded in notes, which function similarly to UTXOs. Each note specifies (either directly or indirectly) a type of value, an amount of value of that type, a spending key that authorizes spending the note’s value, and a unique nullifier derived from the note’s contents.

However, unlike UTXOs, notes are not recorded as part of the public chain state. Instead, the chain contains a state commitment tree, an incremental Merkle tree containing (public) commitments to (private) notes. Creating a note involves creating a new note commitment, and proving that it commits to a valid note. Spending a note involves proving that the spent note was previously included in the state commitment tree, using the spending key to demonstrate spend authorization, and revealing the nullifier, which prevents the same note from being spent twice. Nullifiers that correspond to each spent note are tracked in the nullifier set, which is recorded as part of the public chain state.

Transactions

Transactions describe an atomic collection of changes to the ledger state. Each transaction consists of a sequence of descriptions for various actions1. Each description adds or subtracts (typed) value from the transaction’s value balance, which must net to zero.

Penumbra adapts Sapling’s Spend, which spends a note and adds to the transaction’s value balance, and Output, which creates a new note and subtracts from the transaction’s value balance. Penumbra also adds many new descriptions to support additional functionality:

Transfers

-

Spend descriptions spend an existing note, adding its value to the transaction’s value balance;

-

Output descriptions create a new note, subtracting its value from the transaction’s value balance;

-

Transfer descriptions transfer value out of Penumbra by IBC, consuming value from the transaction’s value balance, and producing an ICS20

FungibleTokenPacketDatafor the counterparty chain;

Staking

-

Delegate descriptions deposit unbonded stake into a validator’s delegation pool, consuming unbonded stake from the transaction’s value balance and producing new notes recording delegation tokens representing the appropriate share of the validator’s delegation pool;

-

Undelegate descriptions withdraw from a validator’s delegation pool, consuming delegation tokens from the transaction’s value balance and producing new notes recording the appropriate amount of unbonded stake;

Governance

-

CreateProposal descriptions are used to propose measures for on-chain governance and supply a deposit, consuming bonded stake from the transaction’s value balance and producing a new note that holds the deposit in escrow;

-

WithdrawProposal descriptions redeem an escrowed proposal deposit, returning it to the transaction’s value balance and immediately withdrawing the proposal.

-

Vote descriptions perform private voting for on-chain governance and declare a vote. This description leaves the value balance unchanged.

Trading

-

Swap descriptions perform the first phase of a swap, consuming tokens of one type from a transaction’s value balance, burning them, and producing a swap commitment for use in the second stage;

-

SwapClaim descriptions perform the second phase of a swap, allowing a user who burned tokens of one type to mint tokens of the other type at the chain-specified clearing price, and adding the new tokens to a transaction’s value balance;

Market-making

-

OpenPosition descriptions open concentrated liquidity positions, consuming value of the traded types from the transaction’s value balance and adding the specified position to the AMM state;

-

ClosePosition descriptions close concentrated liquidity positions, removing the specified position to the AMM state and adding the value of the position, plus any accumulated fees or liquidity rewards, to the transaction’s value balance.

Each transaction also contains a fee specification, which is always transparently encoded. The value balance of all of a transactions actions, together with the fees, must net to zero.

Note that like Zcash Orchard, we use the term “action” to refer to one of a number of possible state updates; unlike Orchard, we do not attempt to conceal which types of state updates are performed, so our Action is an enum.

Governance

Penumbra supports on-chain governance with delegated voting. Validators’ votes are public and act as default votes for their entire delegation pool, while delegators’ votes are private, and override the default vote provided by their validator.

Votes are similar to Cosmos Hub: Yes, No, and Abstain have the same meanings.

However, Penumbra does not have NoWithVeto; instead, a sufficiently high threshold

of “no” votes (determined by a chain parameter) will slash a proposal, which causes

its deposit to be burned. This functions as a spam deterrent.

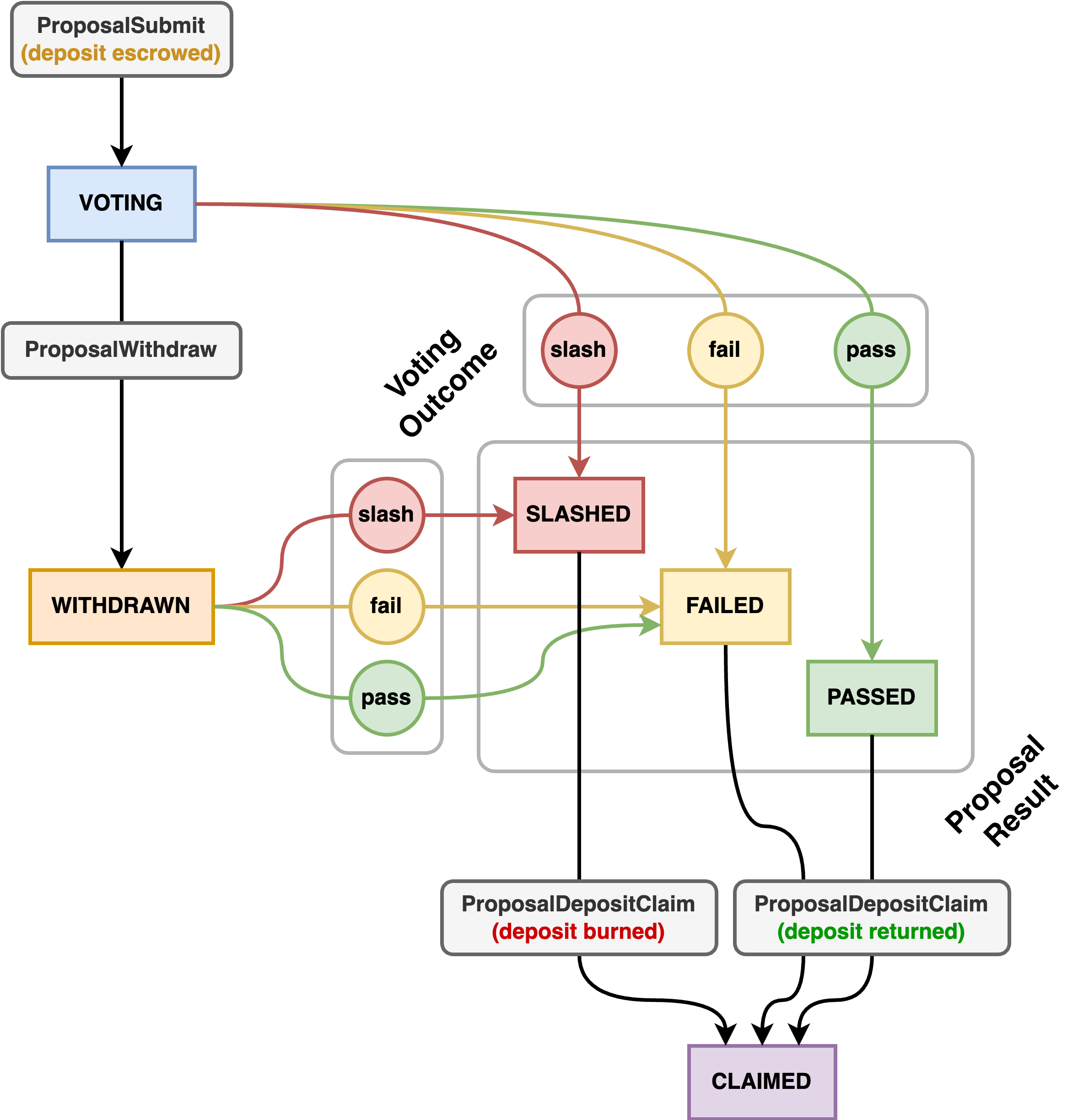

Proposals

Penumbra users can propose votes by escrowing a minimum amount of PEN. They

do this by creating a transaction with a ProposalSubmit description, which

consumes some amount of PEN from the transaction’s balance, and creates a

proposal_N_voting NFT, which can be redeemed for the proposal deposit in the case

that the voting concludes without slashing the proposal (both failed and passed

proposals can reclaim their deposit).

Proposals can either be normal or emergency proposals. In either case, the voting period begins immediately, in the next block after the proposal has been committed to the chain. Normal proposals have a fixed-length voting period, while emergency proposals are accepted as soon as a 1/3 majority of the stake is reached.

Because validators provide default votes for their delegation pool, an emergency proposal can in principle be accepted immediately, without any input from delegators. This allows time-critical resolution of emergencies (e.g., deploying an 0day hotfix); a 1/3 + 1 plurality of the stake required is already sufficient to halt the chain.

Proposals can also be withdrawn by their proposer prior to the end of the voting

period. This is done by creating a transaction with a ProposalWithdraw

description, and allows the community to iterate on proposals as the (social)

governance process occurs. For instance, a chain upgrade proposal can be

withdrawn and re-proposed with a different source hash if a bug is discovered

while upgrade voting is underway. Withdrawn proposals cannot be accepted, even

if the vote would have passed, but they can be slashed.1

Voting

Stakeholder votes are of the form , representing the weights for yes, no, and abstain, respectively. Most stakeholders would presumably set all but one weight to . Stakeholders vote by proving ownership of some amount of bonded stake (their voting power) prior to the beginning of the voting period.

To do this, they create a transaction with a Vote description. This

description identifies the validator and the proposal, proves spend

authority over a note recording dPEN(v), and reveals the note’s nullifier.

Finally, it proves vote consistency , produces a new

note with dPEN(v), and includes , an encryption

of the vote weights to the validators’ decryption key.

The proof statements in a Vote description establishing spend authority over

the note are almost identical to those in a Spend description. However, there

are two key differences. First, rather than checking the note’s

nullifier against the global nullifier set and marking it as spent, the

nullifier is checked against a snapshot of the nullifier set at the time that

voting began (establishing that it was unspent then), as well as against a

per-proposal nullifier set (establishing that it has not already been used for

voting). In other words, instead of marking that the note has been spent in

general, we only mark it as having been spent in the context of voting on a

specific proposal. Second, the ZK proof additionally proves that the note was

created before the proposal started, and the stateful checks ensure that it was not

spent after the proposal started.

This change allows multiple proposals to be voted on concurrently, at the cost

of linkability. While the same note can be used to vote on multiple proposals,

those votes, as well as the subsequent spend of the note, will have the same

nullifier and thus be linkable to each other. However, the Vote descriptions

are shielded, so an observer only learns that two opaque votes were related to

each other.

We suggest that wallets roll over the note the first time it is used for voting

by creating a transaction with Vote, Spend, and Output descriptions. This

mitigates linkability between Vote and Spend descriptions, and means that

votes on any proposals created after the first vote are unlinkable from prior

votes.

Counting Votes

At the end of each epoch, validators collect the encrypted votes from each delegation pool, aggregate the encrypted votes into encrypted tallies and decrypt the tallies. These intermediate tallies are revealed, because it is not possible to batch value flows over time intervals longer than one epoch. In practice, this provides a similar dynamic as existing (transparent) on-chain governance schemes, where tallies are public while voting is ongoing.

At the end of the voting period, the per-epoch tallies are summed. For each validator , the votes for each option are summed to determine the portion of the delegation pool that voted; the validator’s vote acts as the default vote for the rest of the delegation pool. Finally, these per-validator subtotals are multiplied by the voting power adjustment function to obtain the final vote totals.

If the vote was not slashed, the proposal_N_voting NFT created during proposal

submission can be redeemed for the original proposal deposit (if not slashed)

and a commemorative proposal_N_passed, proposal_N_failed, or proposal_N_slashed

NFT.

If withdrawing a proposal halted on-chain voting immediately, the escrow mechanism would not be effective at deterring spam, since the proposer could yank their proposal at the last minute prior to losing their deposit. However, at the UX level, withdrawn proposals can be presented as though voting were closed, since validators’ default votes are probably sufficient for spam deterrence.

Primitives

Penumbra uses the following cryptographic primitives, described in the following sections:

-

The Proof System section describes the choice of proving curve (BLS12-377) and proof system (Groth16, and potentially PLONK in the future);

-

The

decaf377section describesdecaf377, a parameterization of the Decaf construction defined over the BLS12-377 scalar field, providing a prime-order group that can be used inside or outside of a circuit; -

The Poseidon for BLS12-377 section describes parameter selection for an instantiation of Poseidon, a SNARK-friendly sponge construction, over the BLS12-377 scalar field;

-

The Fuzzy Message Detection section describes a construction that allows users to outsource a probabilistic detection capability, allowing a third party to scan and filter the chain on their behalf, without revealing precisely which transactions are theirs.

-

The Homomorphic Threshold Decryption section describes the construction used to batch flows of value across transactions.

-

The Randomizable Signatures section describes

decaf377-rdsa, a variant of the Zcash RedDSA construction instantiated overdecaf377, used for binding and spend authorization signatures. -

The Key Agreement section describes

decaf377-ka, an instantiation of Diffie-Hellman key agreement overdecaf377.

Proving Considerations

Penumbra needs SNARK proofs. Because the choice of proving system and proving curve can’t really be cleanly separated from the rest of the system choices (e.g., the native field of the proving system informs what embedded curve is available, and how circuit programming is done), large parts of the rest of the system design block on making a choice of proving system.

Goals

-

Near-term implementation availability. We’d like to ship a useful product first, and iterate and expand it later.

-

High performance for fixed functionality. Penumbra intends to support fixed functionality initially; programmability is a good future goal but isn’t a near-term objective. The fixed functionality should have as high performance as possible.

-

Longer-term flexibility. The choice should ideally not preclude many future choices for later functionality. More precisely, it should not impose high switching costs on future choices.

-

Recursion capability. Penumbra doesn’t currently make use of recursion, but there are a lot of interesting applications it could be used for.

Setup ceremonies are beneficial to avoid for operational reasons, but not for security reasons. A decentralized setup procedure is sufficient for security.

Options

Proof systems:

- Groth16:

- Pros: high performance, very small proofs, mature system

- Cons: requires a setup for each proof statement

- PLONK:

- Pros: universal setup, still fairly compact proofs, seems to be a point of convergence with useful extensions (plookup, SHPLONK, etc)

- Cons: bigger proofs, worse constants than Groth16

- Halo 2

- Pros: no setup, arbitrary depth recursion

- Cons: bigger proof sizes, primary implementation for the Pallas/Vesta curves which don’t support pairings

Curve choices:

-

BLS12-381:

- Pros: very mature, used by Sapling already

- Cons: no easy recursion

-

BLS12-377:

- Pros: constructed as part of Zexe to support depth 1 recursion using a bigger parent curve, deployed in Celo, to be deployed in Zexe

- Cons: ?

-

Pallas/Vesta:

- Pros: none other than support for Halo 2’s arbitrary recursion

- Cons: no pairings mean they cannot be used for any pairing-based SNARK

Considerations

Although the choice of proof system (Groth16, Plonk, Halo, Pickles, …) is not completely separable from the choice of proving curve (e.g., pairing-based SNARKs require pairing-friendly curves), to the extent that it is, the choice of the proof system is relatively less important than the choice of proving curve, because it is easier to encapsulate.

The choice of proving curve determines the scalar field of the arithmetic circuit, which determines which curves are efficient to implement in the circuit, which determines which cryptographic constructions can be performed in the circuit, which determines what kind of key material the system uses, which propagates all the way upwards to user-visible details like the address format. While swapping out a proof system using the same proving curve can be encapsulated within an update to a client library, swapping out the proving curve is extremely disruptive and essentially requires all users to generate new addresses and migrate funds.

This means that, in terms of proof system flexibility, the Pallas/Vesta curves are relatively disadvantaged compared to pairing-friendly curves like BLS12-381 or BLS12-377, because they cannot be used with any pairing-based SNARK, or any other pairing-based construction. Realistically, choosing them is committing to using Halo 2.

Choosing BLS12-377 instead of BLS12-381 opens the possibility to do depth-1 recursion later, without meaningfully restricting the near-term proving choices. For this reason, BLS12-377 seems like the best choice of proving curve.

Penumbra’s approach is to first create a useful set of fixed functionality, and

generalize to custom, programmable functionality only later. Compared to

Sapling, there is more functionality (not just Spend and Output but

Delegate, Undelegate, Vote, …), meaning that there are more proof

statements. Using Groth16 means that each of these statements needs to have its

own proving and verification key, generated through a decentralized setup.

So the advantage of a universal setup (as in PLONK) over per-statement setup (as in Groth16) would be:

- The setup can be used for additional fixed functionality later;

- Client software does not need to maintain distinct proving/verification keys for each statement.

(2) is a definite downside, but the impact is a little unclear. As a point of reference, the Sapling spend and output parameters are 48MB and 3.5MB respectively. The size of the spend circuit could be improved using a snark-friendly hash function.

With regard to (1), if functionality were being developed in many independent pieces, doing many setups would impose a large operational cost. But doing a decentralized setup for a dozen proof statements simultaneously does not seem substantially worse than doing a decentralized setup for a single proof statement. So the operational concern is related to the frequency of groups of new statements, not the number of statements in a group. Adding a later group of functionality is easy if the first group used a universal setup. But if it didn’t, the choice of per-statement setup initially doesn’t prevent the use of a universal setup later, as long as the new proof system can be implemented using the same curve.

Because Penumbra plans to have an initial set of fixed functionality, and performance is a concern, Groth16 seems like a good choice, and leaves the door open for a future universal SNARK. Using BLS12-377 opens the door to future recursion, albeit only of depth 1.

The decaf377 group

Penumbra, like many other zero-knowledge protocols, requires a cryptographic group that can be used inside of an arithmetic circuit. This is accomplished by defining an “embedded” elliptic curve whose base field is the scalar field of the proving curve used by the proof system.

The Zexe paper, which defined BLS12-377, also defined (called in Figure 16 of the paper) a cofactor-4 Edwards curve defined over the BLS12-377 scalar field for exactly this purpose. However, non-prime-order groups are a leaky abstraction, forcing all downstream constructions to pay attention to correct handling of the cofactor. Although it is usually possible to do so safely, it requires additional care, and the optimal technique for handling the cofactor is different inside and outside of a circuit.

Instead, applying the Decaf construction to this curve gives decaf377, a

clean abstraction that provides a prime-order group complete with hash-to-group

functionality and whose encoding and decoding functions integrate validation.

Although it imposes a modest additional cost in the circuit context, as

discussed in Costs and Alternatives, the

construction works the same way inside and outside of a circuit and imposes no

costs for lightweight, software-only applications, making it a good choice for

general-purpose applications.

Curve Parameters

The cofactor-4 Edwards curve defined over the BLS12-377 scalar field has the following parameters:

- Base field: Integers mod prime

- Elliptic curve equation: with and

- Curve order: where

We use a conventional generator basepoint selected to have a convenient hex encoding:

0x0800000000000000000000000000000000000000000000000000000000000000

In affine coordinates this generator point has coordinates:

Implementation

An implementation of decaf377 can be found here.

Costs and Alternatives

Arithmetic circuits have a different cost model than software. In the software cost model, software executes machine instructions, but in the circuit cost model, relations are certified by constraints. Unfortunately, while Decaf is a clearly superior choice in the software context, in the circuit context it imposes some additional costs, which must be weighed against its benefits.

At a high level, Decaf implements a prime-order group using a non-prime-order curve by constructing a group quotient. Internally, group elements are represented by curve points, with a custom equality check so that equivalent representatives are considered equal, an encoding function that encodes equivalent representatives as identical bitstrings, and a decoding function that only accepts canonical encodings of valid representatives.

To do this, the construction defines a canonical encoding on a Jacobi quartic curve mod its 2-torsion (a subgroup of size 4) by making two independent sign choices. Then, it uses an isogeny to transport this encoding from the Jacobi quartic to a target curve that will be used to actually implement the group operations. This target curve can be an Edwards curve or a Montgomery curve. The isogenies are only used for deriving the construction. In implementations, all of these steps are collapsed into a single set of formulas that perform encoding and decoding on the target curve.

In other words, one way to think about the Decaf construction is as some machinery that transforms two sign choices into selection of a canonical representative. Ristretto adds extra machinery to handle cofactor 8 by making an additional sign choice.

In the software cost model, where software executes machine instructions, this construction is essentially free, because the cost of both the Decaf and conventional Edwards encodings are dominated by the cost of computing an inverse or an inverse square root, and the cost of the sign checks is insignificant.

However, in the circuit cost model, where relations are certified by various constraints, this is no longer the case. On the one hand, certifying a square root or an inverse just requires checking that or that , which is much cheaper than actually computing or . On the other hand, performing a sign check involves bit-constraining a field element, requiring hundreds of constraints.

Sign checks

The definition of which finite field elements are considered nonnegative is essentially arbitrary. The Decaf paper suggests three possibilities:

-

using the least significant bit, defining to be nonnegative if the least absolute residue for is even;

-

using the most significant bit, defining to be nonnegative if the least absolute residue for is in the range ;

-

for fields where , using the Legendre symbol, which distinguishes between square and nonsquare elements.

Using the Legendre symbol is very appealing in the circuit context, since it has an algebraic definition and, at least in the case of square elements, very efficient certification. For instance, if square elements are chosen to be nonnegative, then certifying that is nonnegative requires only one constraint, . However, the reason for the restriction to fields is that and should have different signs, which can only be the case if is nonsquare. Unfortunately, many SNARK-friendly curves, including BLS12-377, are specifically chosen so that for as large a power as possible (e.g., in the case of BLS12-377).

This leaves us with either the LSB or MSB choices. The least significant bit is potentially simpler for implementations, since it is actually the low bit of the encoding of , while the most significant bit isn’t, because it measures from , not a bit position , so it seems to require a comparison or range check to evaluate. However, these choices are basically equivalent, in the following sense:

Lemma.1

The most significant bit of is if and only if the least significant bit of is .

Proof.

The MSB of is if and only if , but this means that , which is even, is the least absolute residue, so the LSB of is also . On the other hand, the MSB of is if and only if , i.e., if , i.e., if . This means that the least absolute residue of is ; since is even and is odd, this is odd and has LSB .

This means that transforming an LSB check to an MSB check or vice versa requires multiplication by or , which costs at most one constraint.

Checking the MSB requires checking whether a value is in the range . Using Daira Hopwood’s optimized range constraints, the range check costs 2. However, the input to the range check is a bit-constrained unpacking of a field element, not a field element itself. This unpacking costs .

Checking the LSB is no less expensive, because although the check only examines one bit, the circuit must certify that the bit-encoding is canonical. This requires checking that the value is in the range , which also costs , and as before, the unpacking costs .

In other words, checking the sign of a field element costs , or in the case where the field element is already bit-encoded for other reasons. These checks are the dominant cost for encoding and decoding, which both require two sign checks. Decoding from bits costs c. , decoding from a field element costs c. , and encoding costs c. regardless of whether the output is encoded as bits or as a field element.

For decaf377, we choose the LSB test for sign checks.

Alternative approaches to handling cofactors

Decaf constructs a prime-order group whose encoding and decoding methods perform validation. A more conventional alternative approach is to use the underlying elliptic curve directly, restrict to its prime-order subgroup, and do subgroup validation separately from encoding and decoding. If this validation is done correctly, it provides a prime-order group. However, because validation is an additional step, rather than an integrated part of the encoding and decoding methods, this approach is necessarily more brittle, because each implementation must be sure to do both steps.

In the software cost model, there is no reason to use subgroup validation, because it is both more expensive and more brittle than Decaf or Ristretto. However, in the circuit cost model, there are cheaper alternatives, previously analyzed by Daira Hopwood in the context of Ristretto for JubJub (1, 2).

Multiplication by the group order.

The first validation method is to do a scalar multiplication and check that . Because the prime order is fixed, this scalar multiplication can be performed more efficiently using a hardcoded sequence of additions and doublings.

Cofactor preimage.

The second validation method provides a preimage in affine coordinates . Because the image of is the prime-order subgroup, checking that satisfies the curve equation and that checks that is in the prime-order subgroup.

In the software context, computing and computing cost about the same, although both are an order of magnitude more expensive than decoding. But in the circuit context, the prover can compute outside of the circuit and use only a few constraints to check the curve equation and two doublings. These costs round to zero compared to sign checks, so the validation is almost free.

The standard “compressed Edwards y” format encodes a point by the -coordinate and a sign bit indicating whether is nonnegative. In software, the cost of encoding and decoding are about the same, and dominated by taking an inverse square root. In circuits, the costs of encoding and decoding are also about the same, but they are instead dominated by a sign check that matches the sign of the recovered -coordinate with the supplied sign bit. This costs c. as above.

Comparison and discussion

This table considers only approximate costs.

| Operation | decaf377 | Compressed Ed + Preimage |

|---|---|---|

| Decode (from bits) | 400C | 400C |

| Decode (from ) | 750C | 325C |

| Encode (to bits) | 750C | 750C |

| Encode (to ) | 750C | 325C |

When decoding from or encoding to field elements, the marginal cost of Decaf compared to the compressed Edwards + cofactor preimage is an extra bit-unpacking and range check. While this effectively doubles the number of constraints, the marginal cost of c. is still small relative to other operations like a scalar multiplication, which at 6 constraints per bit is approximately .

When decoding from or encoding to bits, the marginal cost of Decaf disappears. When the input is already bit-constrained, Decaf’s first sign check can reuse the bit constraints, saving c. , but the compressed Edwards encoding must range-check the bits (which Decaf already does), costing c. extra. Similarly, in encoding, Decaf’s second sign check produces bit-constrained variables for free, while the compressed Edwards encoding spends c. bit-constraining and range-checking them.

However, in the software context, the prime-order validation check costs approximately 10x more than the cost of either encoding. Many applications require use of the embedded group both inside and outside of the circuit, and uses outside of the circuit may have additional resource constraints (for instance, a hardware token creating a signature authorizing delegated proving, etc.).

Performing validation as an additional, optional step also poses additional risks. While a specification may require it to be performed, implementations that skip the check will appear to work fine, and the history of invalid-point attacks (where implementations should, but don’t, check that point coordinates satisfy the curve equation) suggests that structuring validation as an integral part of encoding and decoding is a safer design. This may not be a concern for a specific application with a single, high-quality implementation that doesn’t make mistakes, but it’s less desirable for a general-purpose construction.

In summary, Decaf provides a construction that works the same way inside and outside of a circuit and integrates validation with the encoding, imposing only a modest cost for use in circuits and no costs for lightweight, software-only applications, making it a good choice for general-purpose constructions.

I have no idea whether this is common knowledge; I learned of this fact from its use in Mike Hamburg’s Ed448-Goldilocks implementation.

The value 73 is computed as:

from itertools import groupby

def cost(k):

return min(k-1, 2)

def constraints(bound):

costs = [cost(len(list(g))+1) for (c, g) in groupby(bound.bits()[:-1]) if c == 1]

return sum(costs)

constraints(ZZ((q-1)/2))

as here.

Inverse Square Roots

As in the internet-draft, the decaf377 functions are defined in terms of the

following function, which computes the square root of a ratio of field elements,

with the special behavior that if the input is nonsquare, it returns the square

root of a related field element, to allow reuse of the computation in the

hash-to-group setting.

Define as a non-square in the field and sqrt_ratio_zeta(N,D) as:

- (True, ) if and are nonzero, and is square;

- (True, ) if is zero;

- (False, ) if is zero and is non-zero;

- (False, ) if and are nonzero, and is nonsquare.

Since is nonsquare, if is nonsquare, is

square. Note that unlike the similar function in the

ristretto255/decaf448 internet-draft, this function does not make any

claims about the sign of its output.

To compute sqrt_ratio_zeta we use a table-based method adapted from Sarkar 2020 and zcash-pasta, which is described in the remainder of this section.

Constants

We set (the 2-adicity of the field) and odd such that . For the BLS12-377 scalar field, and .

We define where is a non-square as described above. We fix as 2841681278031794617739547238867782961338435681360110683443920362658525667816.

We then define a and such that . We also define a parameter where . For decaf377 we choose:

Precomputation

Lookup tables are needed which can be precomputed as they depend only on the 2-adicity and the choice of above.

lookup table:

We compute for and , indexed on and :

This table lets us look up powers of .

lookup table:

We compute for , indexed on :

We use this table in the procedure that follows to find (they are the values) in order to compute .

Procedure

In the following procedure, let . We use the following relations from Sarkar 2020:

- Equation 1: and for and

- Lemma 3: for

- Equation 2:

In these expressions, and are field elements. are unsigned -bit integers. At each , the algorithm first computes , then and (from the previous step’s and ), then finally and , in each case such that they satisfy the above expressions. Note that in the algorithm .

Step 1: Compute

We compute . This corresponds to line 2 of the findSqRoot Algorithm 1 in Sarkar 2020.

Substituting :

Applying Fermat’s Little Theorem (i.e. ):

Substituting and rearranging:

We compute using a quantity we define as:

We also define:

And substitute and into which gives us:

We now can use in the computation for and :

Step 2: Compute

Compute using and as calculated in the prior step. This corresponds to line 4 of the findSqRoot Algorithm 1 in Sarkar 2020.

Step 3: Compute

We next compute for . This corresponds to line 5 of the findSqRoot Algorithm 1 in Sarkar 2020. This gives us the following components:

Step 4: Compute and

Next, we loop over . This corresponds to lines 6-9 of the findSqRoot Algorithm 1 in Sarkar 2020.

For

Using Lemma 3:

Substituting the definition of from equation 1:

Rearranging and substituting in (initial condition):

Substituting in equation 2:

This is almost in a form where we can look up in our s lookup table to get and thus . If we define we get:

Which we can use with our s lookup table to get . Expressing in terms of , we get .

For

First we compute using equation 1:

Next, similar to the first iteration, we use lemma 3 and substitute in and to yield:

In this expression we can compute the quantities on the left hand side, and the right hand side is in the form we expect for the s lookup table, yielding us . Note that here too we define such that the s lookup table can be used. Expressing in terms of , we get .

For

The remaining iterations proceed similarly, yielding the following expressions:

Note for and the remaining iterations we do not require a trick (i.e. where ) to get in a form where it can be used with the s lookup table. In the following expressions for , is always even, and so the high bit of each value is unchanged when adding .

At the end of this step, we have found and for .

Step 5: Return result

Next, we can use equation 1 to compute using and from the previous step:

This matches the expression from Lemma 4 in Sarkar 2020.

Next, to compute , we lookup entries in the g lookup table. To do so, we can decompose into:

then is computed as:

Multiplying in from step 1, we compute:

This corresponds to line 10 of the findSqRoot Algorithm 1 in Sarkar 2020.

In the non-square case, will be odd, and will be odd. We will have computed and multiply by a correction to get our desired output.

We can use the result of this computation to determine whether or not the square exists, recalling from Step 1 that :

- If is square, then , and

- If is non-square, then and .

Decoding

Decoding to a point works as follows where and are the curve parameters as described here.

-

Decode

s_bytesto a field element . We interpret these bytes as unsigned little-endian bytes. We check if the length has 32 bytes, where the top 3 bits of the last byte are 0. The 32 bytes are verified to be canonical, and rejected if not (if the input is already a field element in the circuit case, skip this step). -

Check that is nonnegative, or reject (sign check 1).

-

.

-

.

-

(was_square, v) = sqrt_ratio_zeta(1, u_2 * u_1^2), rejecting ifwas_squareis false. -

if is negative (sign check 2)1.

-

.

The resulting coordinates are the affine Edwards coordinates of an internal representative of the group element.

Note this differs from the Decaf paper in Appendix A.2, but

implementations of decaf377 should follow the convention described here.

Encoding

Given a representative in extended coordinates , encoding works as follows where and are the curve parameters as described here.

-

.

-

(_ignored, v) = sqrt_ratio_zeta(1, u_1 * (a - d) * X^2). -

(sign check 1).

-

.

-

.

-

Set

s_bytesto be the canonical unsigned little-endian encoding of , which is an integer mod .s_byteshas extra0x00bytes appended to reach a length of 32 bytes.

Group Hash

Elligator can be applied to map a field element to a curve point. The map can be applied once to derive a curve point suitable for use with computational Diffie-Hellman (CDH) challenges, and twice to derive a curve point indistinguishable from random.

In the following section, and are the curve parameters as described here. is a constant and sqrt_ratio_zeta(v_1,v_2) is a function, both defined in the Inverse Square Roots section.

The Elligator map is applied as follows to a field element :

-

.

-

.

-

.

-

sqrt_ratio_zetawhere is a boolean indicating whether or not a square root exists for the provided input. -

If a square root for does not exist, then and . Else, and is unchanged.

-

.

-

.

-

If ( and is true) or ( and is false) then .

The Jacobi quartic representation of the resulting point is given by . The resulting point can be converted from its Jacobi quartic representation to extended projective coordinates via:

For single-width hash-to-group (encode_to_curve), we apply the above map once. For double-width (hash_to_curve) we apply the map to two field elements and add the resulting curve points.

Test Vectors

Small generator multiples

This table has hex-encodings of small multiples of the generator :

| Element | Hex encoding |

|---|---|

0000000000000000000000000000000000000000000000000000000000000000 | |

0800000000000000000000000000000000000000000000000000000000000000 | |

b2ecf9b9082d6306538be73b0d6ee741141f3222152da78685d6596efc8c1506 | |

2ebd42dd3a2307083c834e79fb9e787e352dd33e0d719f86ae4adb02fe382409 | |

6acd327d70f9588fac373d165f4d9d5300510274dffdfdf2bf0955acd78da50d | |

460f913e516441c286d95dd30b0a2d2bf14264f325528b06455d7cb93ba13a0b | |

ec8798bcbb3bf29329549d769f89cf7993e15e2c68ec7aa2a956edf5ec62ae07 | |

48b01e513dd37d94c3b48940dc133b92ccba7f546e99d3fc2e602d284f609f00 | |

a4e85dddd19c80ecf5ef10b9d27b6626ac1a4f90bd10d263c717ecce4da6570a | |

1a8fea8cbfbc91236d8c7924e3e7e617f9dd544b710ee83827737fe8dc63ae00 | |

0a0f86eaac0c1af30eb138467c49381edb2808904c81a4b81d2b02a2d7816006 | |

588125a8f4e2bab8d16affc4ca60c5f64b50d38d2bb053148021631f72e99b06 | |

f43f4cefbe7326eaab1584722b1b4860de554b23a14490a03f3fd63a089add0b | |

76c739a33ffd15cf6554a8e705dc573f26490b64de0c5bd4e4ac75ed5af8e60b | |

200136952d18d3f6c70347032ba3fef4f60c240d706be2950b4f42f1a7087705 | |

bcb0f922df1c7aa9579394020187a2e19e2d8073452c6ab9b0c4b052aa50f505 |

Hash-to-group

This table has input field elements along with the affine coordinates of the output point after applying the elligator map once:

| Input field element | output point |

|---|---|

2873166235834220037104482467644394559952202754715866736878534498814378075613 | (1267955849280145133999011095767946180059440909377398529682813961428156596086, 5356565093348124788258444273601808083900527100008973995409157974880178412098) |

7664634080946480262422274939177258683377350652451958930279692300451854076695 | (1502379126429822955521756759528876454108853047288874182661923263559139887582, 7074060208122316523843780248565740332109149189893811936352820920606931717751) |

707087697291448463178823336344479808196630248514167087002061771344499604401 | (2943006201157313879823661217587757631000260143892726691725524748591717287835, 4988568968545687084099497807398918406354768651099165603393269329811556860241) |

4040687156656275865790182426684295234932961916167736272791705576788972921292 | (2893226299356126359042735859950249532894422276065676168505232431940642875576, 5540423804567408742733533031617546054084724133604190833318816134173899774745) |

6012393175004325154204026250961812614679561282637871416475605431319079196219 | (2950911977149336430054248283274523588551527495862004038190631992225597951816, 4487595759841081228081250163499667279979722963517149877172642608282938805393) |

7255180635786717958849398836099816771666363291918359850790043721721417277258 | (3318574188155535806336376903248065799756521242795466350457330678746659358665, 7706453242502782485686954136003233626318476373744684895503194201695334921001) |

6609366864829739556945402594963920739176902000316365292959221199804402230199 | (3753408652523927772367064460787503971543824818235418436841486337042861871179, 2820605049615187268236268737743168629279853653807906481532750947771625104256) |

6875465950337820928985371259904709015074922314668494500948688901607284806973 | (7803875556376973796629423752730968724982795310878526731231718944925551226171, 7033839813997913565841973681083930410776455889380940679209912201081069572111) |

Randomizable Signatures

Penumbra’s signatures are provided by decaf377-rdsa, a variant of the Zcash

RedDSA construction instantiated using the decaf377 group.

These are Schnorr signatures, with two additional properties relevant to

Penumbra:

-

They support randomization of signing and verification keys. Spending a note requires use of the signing key that controls its spend authorization, but if the same spend verification key were included in multiple transactions, they would be linkable. Instead, both the signing and verification keys are kept secret1, and each spend description includes a randomization of the verification key, together with a proof that the randomized verification key was derived from the correct spend verification key.

-

They support addition and subtraction of signing and verification keys. This property is used for binding signatures, which bind zero-knowledge proofs to the transaction they were intended for and enforce conservation of value.

decaf377-rdsa

Let be the decaf377 group of prime order . Keys and signatures

are parameterized by a domain . Each domain has an associated generator

. Currently, there are two defined domains: and

. The hash function is instantiated by using blake2b with the personalization string

decaf377-rdsa---, treating the 64-byte output as the little-endian encoding of

an integer, and reducing that integer modulo .

A signing key is a scalar . The corresponding verification key is the group element .

Sign

On input message m with signing key , verification key , and domain :

- Generate 80 random bytes.

- Compute the nonce as .

- Commit to the nonce as .

- Compute the challenge as .

- Compute the response as .

- Output the signature

R_bytes || s_bytes.

Verify

On input message m, verification key A_bytes, and signature sig_bytes:

- Parse from

A_bytes, or fail ifA_bytesis not a valid (hence canonical)decaf377encoding. - Parse

sig_bytesasR_bytes || s_bytesand the components as and , or fail if they are not valid (hence canonical) encodings. - Recompute the challenge as .

- Check the verification equation , rejecting the signature if it is not satisfied.

SpendAuth signatures

The first signature domain used in Penumbra is for spend authorization signatures. The basepoint is the conventional decaf377 basepoint 0x0800000....

Spend authorization signatures support randomization:

Randomize.SigningKey

Given a randomizer , the randomized signing key is .

Randomize.VerificationKey

Given a randomizer , the randomized verification key is .

Randomizing a signing key and then deriving the verification key associated to the randomized signing key gives the same result as randomizing the original verification key (with the same randomizer).

Implementation

An implementation of decaf377-rdsa can be found here.

Binding signatures

The second signature domain used in Penumbra is for binding signatures. The

basepoint is the result of converting

blake2b(b"decaf377-rdsa-binding") to an element and applying

decaf377’s CDH encode-to-curve method.

Since the verification key corresponding to the signing key is , adding and subtracting signing and verification keys commutes with derivation of the verification key, as desired.

This situation is a good example of why it’s better to avoid the terms “public key” and “private key”, and prefer more precise terminology that names keys according to the cryptographic capability they represent, rather than an attribute of how they’re commonly used. In this example, the verification key should not be public, since it could link different transactions.

Simple example: Binding signature

Let’s say we have two actions in a transaction: one spend (indicated with subscript ) and one output (indicated with subscript ).

The balance commitments for those actions are:

where and are generators, are the blinding factors, and are the values.

When the signer is computing the binding signature, they have the blinding factors for all commitments.

They derive the signing key by adding up the blinding factors based on that action’s contribution to the balance:

The signer compute the binding signature using this key .

When the verifier is checking the signature, they add up the balance commitments to derive the verification key based on their contribution to the balance:

If the transaction is valid, then the first term on the LHS () is zero since for Penumbra all transactions should have zero value balance.

This leaves the verifier with the verification key:

If the value balance is not zero, the verifier will not be able to compute the verification key with the data in the transaction.

Key Agreement

Poseidon for BLS12-377

The Poseidon hash function is a cryptographic hash function that operates natively over prime fields. This allows the hash function to be used efficiently in the context of a SNARK. In the sections that follow we describe our instantiation of Poseidon over BLS12-377.

Overview of the Poseidon Permutation

This section describes the Poseidon permutation. It consists of rounds, where each round has the following steps:

AddRoundConstants: where constants (denoted byarcin the code) are added to the internal state,SubWords: where the S-box is applied to the internal state,MixLayer: where a matrix is multiplied with the internal state.

The total number of rounds we denote by . There are two types of round in the Poseidon construction, partial and full. We denote the number of partial and full rounds by and respectively.

In a full round in the SubWords layer the S-box is applied to each element of the

internal state, as shown in the diagram below:

┌───────────────────────────────────────────────────────────┐

│ │

│ AddRoundConstants │

│ │

└────────────┬──────────┬──────────┬──────────┬─────────────┘

│ │ │ │

┌─▼─┐ ┌─▼─┐ ┌─▼─┐ ┌─▼─┐

│ S │ │ S │ │ S │ │ S │

└─┬─┘ └─┬─┘ └─┬─┘ └─┬─┘

│ │ │ │

┌────────────▼──────────▼──────────▼──────────▼─────────────┐

│ │

│ MixLayer │

│ │

└────────────┬──────────┬──────────┬──────────┬─────────────┘

│ │ │ │

▼ ▼ ▼ ▼

In a partial round, in the SubWords layer we apply the S-box only to one element

of the internal state, as shown in the diagram below:

│ │ │ │

│ │ │ │

┌────────────▼──────────▼──────────▼──────────▼─────────────┐

│ │

│ AddRoundConstants │

│ │

└────────────┬──────────────────────────────────────────────┘

│

┌─▼─┐

│ S │

└─┬─┘

│

┌────────────▼──────────────────────────────────────────────┐

│ │

│ MixLayer │

│ │

└────────────┬──────────┬──────────┬──────────┬─────────────┘

│ │ │ │

▼ ▼ ▼ ▼

We apply half the full rounds () first, then we apply the partial rounds, then the rest of the full rounds. This is called the HADES design strategy in the literature.

Poseidon Parameter Generation

The problem of Poseidon parameter generation is to pick secure choices for the parameters used in the permutation given the field, desired security level in bits, as well as the width of the hash function one wants to instantiate (i.e. 1:1 hash, 2:1 hash, etc.).

Poseidon parameters consist of:

- Choice of S-box: choosing the exponent for the S-box layer where ,

- Round numbers: the numbers of partial and full rounds,

- Round constants: the constants to be added in the

AddRoundConstantsstep, - MDS Matrix: generating a Maximum Distance Separable (MDS) matrix to use in the linear layer, where we multiply this matrix by the internal state.

Appendix B of the Poseidon paper provides sample implementations of both the Poseidon

permutation as well as parameter generation. There is a Python script called

calc_round_numbers.py which provides the round numbers given the security level

, the width of the hash function , as well as the choice of used

in the S-box step. There is also a Sage script, which generates the round numbers,

constants, and MDS matrix, given the security level , the width of the hash

function , as well as the choice of used in the S-box step.

Since the publication of the Poseidon paper, others have edited these scripts, resulting in a number of implementations being in use derived from these initial scripts. We elected to implement Poseidon parameter generation in Rust from the paper, checking each step, and additionally automating the S-box parameter selection step such that one can provide only the modulus of a prime field and the best will be selected.

Below we describe where we deviate from the parameter selection procedure described in the text of the Poseidon paper.

Choice of S-Box

The Poseidon paper focuses on the cases where , as well as BLS12-381 and BN254. For a choice of positive , it must satisfy , where is the prime modulus.

For our use of Poseidon on BLS12-377, we wanted to generate a procedure for selecting the optimal for a general curve, which we describe below.

We prefer small, positive for software (non-circuit) performance, only using when we are unable to find an appropriate positive . For positive , the number of constraints in an arithmetic circuit per S-Box is equal to its depth in a tree of shortest addition chains. For a given number of constraints (i.e. at a given depth in the tree), we pick the largest at that level that meets the GCD requirement. Since larger provides more security, choosing the largest at a given depth reduces the round requirement.

The procedure in detail:

We proceed down the tree from depth 2 to depth 5 (where depth 0 is the root of 1):

- At a given depth, proceed from largest number to smaller numbers.

- For a given element, check if is satisfied. If yes, we choose it, else continue.

If we get through these checks to depth of 5 without finding a positive exponent for , then we pick , which is well-studied in the original Poseidon paper.

For decaf377, following this procedure we end up with .

Round Numbers

We implement the round numbers as described in the original paper. These are the number of rounds necessary to resist known attacks in the literature, plus a security margin of +2 full rounds, and +7.5% partial rounds.

We test our round number calculations with tests from Appendices G and H from the paper which contain concrete instantiations of Poseidon for and their round numbers.

Round Constants

We do not use the Grain LFSR for generating pseudorandom numbers as described in Appendix F of the original paper. Instead, we use a Merlin transcript to enable parameter generation to be fully deterministic and easily reproducible.

We first append the message "poseidon-paramgen" to the transcript

using label dom-sep.

We then bind this transcript to the input parameter choices:

- the width of the hash function using label

t, - the security level using label

M, and - the modulus of the prime field using label

p.

We then also bind the transcript to the specific instance, as done with the Grain LFSR in Appendix F, so we bind to:

- the number of full rounds using label

r_F, - the number of partial rounds using label

r_P, and - the choice of S-box exponent using label

alpha.

We generate random field elements from hashes of this complete transcript of all the input parameters and the derived parameters , , and .

Each round constant is generated by obtaining challenge bytes from the Merlin transcript, derived

using label round-constant. We obtain bytes where is the

field size in bits. These bytes are then interpreted as an unsigned little-endian

integer reduced modulo the field.

MDS Matrix

We generate MDS matrices using the Cauchy method. However instead of randomly sampling the field as described in the text, we deterministically generate vectors and as:

Each element of the matrix is then constructed as:

where .

This deterministic matrix generation method has been verified to be safe over the

base field of decaf377, using algorithms 1-3 described in Grassi, Rechberger

and Schofnegger 2020 over the t range 1-100.

The parameters that are used for poseidon377 are located in the params.rs

module of the source code. The parameters were generated on an Apple M1.

Test Vectors

The following are test vectors for the poseidon377 Poseidon instantiation.

Each section is for a given rate of a fixed-width hash function, where

capacity is 1. Inputs and output are elements. The domain separator

used in each case are the bytes "Penumbra_TestVec" decoded to an element,

where we interpret these bytes in little-endian order.

Rate 1

Input element:

7553885614632219548127688026174585776320152166623257619763178041781456016062

Output element:

2337838243217876174544784248400816541933405738836087430664765452605435675740

Rate 2

Input elements:

75538856146322195481276880261745857763201521666232576197631780417814560160622337838243217876174544784248400816541933405738836087430664765452605435675740

Output element:

4318449279293553393006719276941638490334729643330833590842693275258805886300

Rate 3

Input elements:

755388561463221954812768802617458577632015216662325761976317804178145601606223378382432178761745447842484008165419334057388360874306647654526054356757404318449279293553393006719276941638490334729643330833590842693275258805886300

Output element:

2884734248868891876687246055367204388444877057000108043377667455104051576315

Rate 4

Input elements:

7553885614632219548127688026174585776320152166623257619763178041781456016062233783824321787617454478424840081654193340573883608743066476545260543567574043184492792935533930067192769416384903347296433308335908426932752588058863002884734248868891876687246055367204388444877057000108043377667455104051576315

Output element:

5235431038142849831913898188189800916077016298531443239266169457588889298166

Rate 5

Input elements:

75538856146322195481276880261745857763201521666232576197631780417814560160622337838243217876174544784248400816541933405738836087430664765452605435675740431844927929355339300671927694163849033472964333083359084269327525880588630028847342488688918766872460553672043884448770570001080433776674551040515763155235431038142849831913898188189800916077016298531443239266169457588889298166

Output element:

66948599770858083122195578203282720327054804952637730715402418442993895152

Rate 6

Input elements:

7553885614632219548127688026174585776320152166623257619763178041781456016062233783824321787617454478424840081654193340573883608743066476545260543567574043184492792935533930067192769416384903347296433308335908426932752588058863002884734248868891876687246055367204388444877057000108043377667455104051576315523543103814284983191389818818980091607701629853144323926616945758888929816666948599770858083122195578203282720327054804952637730715402418442993895152

Output element:

6797655301930638258044003960605211404784492298673033525596396177265014216269

Fuzzy Message Detection

By design, privacy-preserving blockchains like Penumbra don’t reveal metadata about the sender or receiver of a transaction. However, this means that users must scan the entire chain to determine which transactions relate to their addresses. This imposes large bandwidth and latency costs on users who do not maintain online replicas of the chain state, as they must “catch up” each time they come online by scanning all transactions that have occurred since their last activity.

Alternatively, users could delegate scanning to a third party, who would monitor updates to the chain state on their behalf and forward only a subset of those updates to the user. This is possible using viewing keys, as in Zcash, but viewing keys represent the capability to view all activity related to a particular address, so they can only be delegated to trusted third parties.

Instead, it would be useful to be able to delegate only a probabilistic detection capability. Analogous to a Bloom filter, this would allow a detector to identify all transactions related to a particular address (no false negatives), while also identifying unrelated transactions with some false positive probability. Unlike viewing capability, detection capability would not include the ability to view the details of a transaction, only a probabilistic association with a particular address. This is the problem of fuzzy message detection (FMD), analyzed by Beck, Len, Miers, and Green in their paper Fuzzy Message Detection, which proposes a cryptographic definition of fuzzy message detection and three potential constructions.

This section explores how Penumbra could make use of fuzzy message detection:

-

In Sender and Receiver FMD, we propose a generalization of the original definition where the false positive probability is set by the sender instead of the receiver, and discusses why this is useful.

-

In Constructing S-FMD, we realize the new definition using a variant of one of the original FMD constructions, and extend it in two ways:

- to support arbitrarily precise detection with compact, constant-size keys;

- to support diversified detection, allowing multiple, publicly unlinkable addresses to be scanned by a single detection key.

Unfortunately, these extensions are not mutually compatible, so we only use the first one, and record the second for posterity.

- In S-FMD Threat Model, we describe the threat model for S-FMD on Penumbra.

- In S-FMD in Penumbra, we describe how S-FMD is integrated into Penumbra.

- In Parameter Considerations, we discuss how the false positive rates should be chosen.